Team:Valencia Biocampus/talking

From 2012.igem.org

Cristina VS (→Light Mediated Translator (LMT))


{{:Team:Valencia_Biocampus/menu2}}
<ol>
<br>
<center>
<div id="Titulos">
<h2>Talking Interfaces</h2>
<!-- I'll move this somewhere else later, but please don't delete it! -->
<br>
<div id="PorDefecto">
=== '''THE PROCESS''' ===
<br>

The main objective of our project is to establish '''verbal communication''' with our microorganisms.
To achieve this, we need to set up the following process:
<br>
<html>

<!-- FLASH-->

</html>

<html>
<img src="https://static.igem.org/mediawiki/2012/2/29/Esquema_interfaces.PNG" height="450" width="780">
</html>

<ol><br>
<li>The input is a ''voice signal'' (the question), which is captured by our '''voice recognizer'''.
<li>The '''voice recognizer''' identifies the question and, through the program in charge of the communication, writes the question's identifier to the port assigned to the ''Arduino''.
<li>The ''Arduino'' software reads this identifier and selects the corresponding output port, which tells the fluorimeter which wavelength to emit onto the culture. There are '''four possible questions''' ('''q'''), each associated with a different wavelength.
<li>The fluorimeter emits light ('''BioInput''') that excites the fluorescent compound through optical filters.
<li>As a result of this excitation, the compound emits fluorescence ('''BioOutput'''), which the fluorimeter measures with a sensor.
<li>This fluorescence corresponds to one of the four possible answers ('''r''': response).
<li>The ''Arduino'' program identifies the answer and writes its identifier to the corresponding port.
<li>The communication program reads the answer identifier from the port.
<li>''eSpeak'' speaks the answer aloud as a voice signal ('''Output''').
</ol>

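The host side of this loop (steps 2, 8 and 9) can be sketched in Python as follows. This is only a minimal sketch under stated assumptions, not our actual program: the question phrases, the one-byte identifiers and the answer texts are hypothetical placeholders, the port can be any object with <code>write()</code>/<code>read()</code> methods (for example a <code>serial.Serial</code> object from the pySerial package), and the answer is spoken by calling the ''eSpeak'' command-line tool.

```python
import subprocess

# Hypothetical mapping from recognized question text to a one-byte identifier.
QUESTION_IDS = {
    "HOW ARE YOU": b"1",
    "ARE YOU HUNGRY": b"2",
    "WHAT IS YOUR NAME": b"3",
    "DO YOU LIKE LIGHT": b"4",
}

# Hypothetical mapping from the answer identifier sent back by the board to a reply.
ANSWER_TEXTS = {
    b"1": "I am fine",
    b"2": "I am hungry",
    b"3": "I am a bacterium",
    b"4": "I love light",
}


def ask(question: str, port) -> str:
    """Send the question identifier to the board and return the answer text.

    `port` is any object with write(bytes) and read(n) -> bytes, e.g. a
    pySerial serial.Serial instance opened on the Arduino's port.
    """
    qid = QUESTION_IDS.get(question.upper().strip())
    if qid is None:
        raise ValueError(f"unknown question: {question!r}")
    port.write(qid)      # step 2: write the question identifier to the port
    rid = port.read(1)   # step 8: read the answer identifier back
    if rid not in ANSWER_TEXTS:
        raise ValueError(f"unexpected answer identifier: {rid!r}")
    return ANSWER_TEXTS[rid]


def speak(text: str) -> None:
    """Step 9: say the answer aloud with the eSpeak command-line tool."""
    subprocess.run(["espeak", text], check=True)
```

For example, <code>speak(ask("how are you", serial.Serial("/dev/ttyACM0", 9600, timeout=5)))</code> would send the question and say the reply; the device path and the 9600 baud rate are assumptions that must match the board and its firmware. Keeping <code>ask()</code> independent of the serial library makes it easy to test with a fake port.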
<br><br>
You can see an animation explaining this process here:<br><br>
<html><center>
<object classid="clsid:D27CDB6E-AE6D-11cf-96B8-444553540000" codebase="http://download.macromedia.com/pub/shockwave/cabs/flash/swflash.cab#version=4,0,2,0" width="550" height="300" align="middle">
<param name=wmode value="transparent">
<param name=movie value="https://static.igem.org/mediawiki/2012/0/06/Animacion_ordenador.swf">
<param name=quality value=high>
<embed src="https://static.igem.org/mediawiki/2012/0/06/Animacion_ordenador.swf" wmode=transparent quality=high pluginspage="http://www.macromedia.com/shockwave/download/index.cgi?P1_Prod_Version=ShockwaveFlash" type="application/x-shockwave-flash" width="550" height="300" align="middle">
</embed>
</object> </center>
</html>
<br><br><br>

<br>
In the following sections we analyse the main elements of the process in detail:
<ul>
<li><html><a href="https://2012.igem.org/Team:Valencia_Biocampus/talking#Voice_recognizer"><b>Voice recognizer</b></a></html>
<li><html><a href="https://2012.igem.org/Team:Valencia_Biocampus/talking#Arduino"><b>Arduino</b></a></html>
<li><html><a href="https://2012.igem.org/Team:Valencia_Biocampus/talking#Fluorimeter"><b>Fluorimeter</b></a></html>
<li><html><a href="https://2012.igem.org/Team:Valencia_Biocampus/talking#Light_Mediated_Translator_.28LMT.29"><b>Light Mediated Translator</b></a></html>
</ul>
<br>

<!--
%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%
-->

=== '''Voice recognizer''' ===

<html>
<center>
<br>
<iframe width="420" height="315" src="http://www.youtube.com/embed/nhM99FoQYSg" frameborder="0" allowfullscreen></iframe>
<br>
</center>
</html>

'''Julius''' is a real-time ''continuous speech recognition'' engine based on '''hidden Markov models''' (HMMs). It is open source and distributed under a ''BSD license''. Its main platforms are '''Linux''' and other '''Unix systems''', but it also runs on '''Windows'''. It has been developed since 1997 as part of a free software toolkit for research in large-vocabulary continuous speech recognition (''LVCSR''), and the work was continued at Kyoto University, Japan, from 1999 to 2003.


To use '''Julius''', a language model and an acoustic model must be created. Julius uses acoustic models and pronunciation dictionaries in the format of the ''HTK'' software, which, unlike Julius, is not open source, but can be downloaded and used to generate acoustic models.


<br><br>
<b> Acoustic model </b>
----------------------------------
An acoustic model is a file containing a statistical representation of each of the distinct sounds (phonemes) that make up words. Julius can recognize speech either through continuous dictation or by using a previously defined grammar. Continuous dictation, however, has a drawback: it requires an acoustic model trained on a large number of voice recordings. The more recordings with different voices and texts the model is trained on, the higher its hit rate. Many sources of variation are involved: the pronunciation of the person training the model, the dialect used, and so on. Currently, Julius has no English model good enough for continuous dictation.

Because of these problems, we chose the second option: we designed a grammar and used the ''VoxForge'' acoustic model, which is based on hidden Markov models.
To do this, we need the following file: <br><br>
- '''.dict''' file: a list of all the words we want the system to recognize, each with its decomposition into phonemes.

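Each dictionary line pairs a word with its phonemes. Assuming the common HTK-style layout <code>WORD [OUTPUT] phone phone ...</code>, where the bracketed output string is optional (in grammar-based dictionaries the first field may instead be a category number), a small Python reader for such a file could look like this. <code>parse_dict</code> and <code>DictEntry</code> are hypothetical names used only for illustration.

```python
import re
from typing import List, NamedTuple


class DictEntry(NamedTuple):
    key: str            # word name (or category number in grammar-based files)
    output: str         # string reported when the word is recognized
    phones: List[str]   # phoneme sequence from the acoustic model's phone set


# Assumed HTK-style layout: KEY [OUTPUT] phone phone ...
_LINE = re.compile(r"^(\S+)\s+(?:\[([^\]]*)\]\s+)?(\S.*)$")


def parse_dict(text: str) -> List[DictEntry]:
    """Parse pronunciation dictionary lines, skipping blank lines."""
    entries = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        m = _LINE.match(line)
        if m is None:
            raise ValueError(f"malformed dictionary line: {raw!r}")
        key, output, phones = m.groups()
        # Without a bracketed output string, the word itself is reported.
        entries.append(DictEntry(key, output if output is not None else key, phones.split()))
    return entries
```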

<ul>
<li><b>Acoustic Analysis</b><br><br>
Acoustic models take the acoustic properties of the input signal. They extract a set of feature vectors, which are then compared with a set of patterns representing the symbols of a phonetic alphabet, and return the symbols that most closely resemble them. This is the basis of the probabilistic mathematical model known as the Hidden Markov Model. Acoustic analysis consists of extracting a feature vector from the input acoustic signal in order to apply pattern-recognition theory.

This vector is a parametric representation of the acoustic signal that keeps the most relevant information and stores it as compactly as possible.
To obtain a good set of vectors, the signal is pre-processed to reduce background noise and correlation.
<br><br>

<li><b>HMM, Hidden Markov Model</b><br><br>
'''Hidden Markov Models (HMMs)''' provide a simple and effective framework
for modelling time-varying spectral vector sequences. As a consequence,
almost all present-day large-vocabulary continuous speech
recognition systems are based on '''HMMs'''.<br><br>

The core of all speech recognition systems consists of a set
of statistical models representing the various sounds of the language to
be recognised. Since speech has temporal structure and can be encoded
as a sequence of spectral vectors spanning the audio frequency range,
the '''hidden Markov model (HMM)''' provides a natural framework for
constructing such models.<br><br>

Basically, the input audio waveform from
a microphone is converted into a sequence of fixed-size acoustic vectors
<math>Y_{1:T} = y_1, \dots, y_T</math> in a process called ''feature extraction''. The decoder
then attempts to find the sequence of words <math>\hat{w}_{1:L} = w_1, \dots, w_L</math> that
is most likely to have generated <math>Y</math>, i.e. the decoder tries to find<br><br>

<math>\hat{w} = \arg\max_{w} P(w \mid Y)</math><br><br>

over all word sequences <math>w</math> considered. Since <math>P(w \mid Y)</math> is hard to model directly, '''Bayes' rule''' is used to transform this into the equivalent problem<br><br>

<math>\hat{w} = \arg\max_{w} p(Y \mid w)\,P(w)</math><br><br>

where the denominator <math>p(Y)</math> has been dropped because it does not depend on <math>w</math>. The likelihood <math>p(Y \mid w)</math> is determined by the acoustic model and the prior <math>P(w)</math> by the language model.

<br><br>
</ul>

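The pre-processing and vector extraction described under ''Acoustic Analysis'' start by cutting the waveform into short overlapping frames. The sketch below is a deliberately simplified front end in plain Python: pre-emphasis, 25 ms frames every 10 ms, a Hamming window, and a single feature per frame (its log energy). The frame sizes and the 0.97 pre-emphasis coefficient are common textbook choices assumed here; real recognizers such as Julius compute much richer vectors (e.g. MFCCs) rather than energy alone.

```python
import math
from typing import List


def pre_emphasis(signal: List[float], alpha: float = 0.97) -> List[float]:
    """Boost high frequencies: y[n] = x[n] - alpha * x[n-1]."""
    if not signal:
        return []
    return [signal[0]] + [signal[n] - alpha * signal[n - 1] for n in range(1, len(signal))]


def frames(signal: List[float], rate: int, win_ms: float = 25.0, hop_ms: float = 10.0) -> List[List[float]]:
    """Split the signal into overlapping frames; an incomplete last frame is dropped."""
    win = int(rate * win_ms / 1000)
    hop = int(rate * hop_ms / 1000)
    return [signal[start:start + win] for start in range(0, len(signal) - win + 1, hop)]


def hamming(frame: List[float]) -> List[float]:
    """Taper the frame edges with a Hamming window to reduce spectral leakage."""
    n = len(frame)
    if n == 1:
        return list(frame)
    return [x * (0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1))) for i, x in enumerate(frame)]


def log_energy_features(signal: List[float], rate: int) -> List[float]:
    """One (tiny) feature per frame: the log energy of the windowed frame."""
    feats = []
    for fr in frames(pre_emphasis(signal), rate):
        energy = sum(x * x for x in hamming(fr))
        feats.append(math.log(energy + 1e-10))  # small floor avoids log(0) on silence
    return feats
```

At 16 kHz, one second of audio yields 98 frames of 400 samples with a 160-sample hop; a full front end would compute a vector of coefficients per frame instead of a single number.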

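In practice, the decoder searches for the most likely sequence of hidden states with the Viterbi algorithm. The following is a minimal log-domain Viterbi for a discrete HMM. The example states (<code>sil</code>, <code>voice</code>), the <code>low</code>/<code>high</code> energy observations and all probabilities are made-up toy values, and this is not how Julius works internally (it uses, among other things, Gaussian-mixture output densities and a beam search).

```python
import math
from typing import Dict, List, Sequence, Tuple


def viterbi(
    obs: Sequence[str],
    states: Sequence[str],
    start_p: Dict[str, float],
    trans_p: Dict[str, Dict[str, float]],
    emit_p: Dict[str, Dict[str, float]],
) -> Tuple[List[str], float]:
    """Return the most likely state path for `obs` and its log probability."""
    if not obs:
        raise ValueError("need at least one observation")

    def log(p: float) -> float:
        return math.log(p) if p > 0 else float("-inf")

    # best[t][s]: log probability of the best path that ends in state s at time t
    best: List[Dict[str, float]] = [
        {s: log(start_p[s]) + log(emit_p[s].get(obs[0], 0.0)) for s in states}
    ]
    back: List[Dict[str, str]] = [{}]
    for t in range(1, len(obs)):
        best.append({})
        back.append({})
        for s in states:
            # Best predecessor r for state s at time t.
            prev, score = max(
                ((r, best[t - 1][r] + log(trans_p[r].get(s, 0.0))) for r in states),
                key=lambda item: item[1],
            )
            best[t][s] = score + log(emit_p[s].get(obs[t], 0.0))
            back[t][s] = prev
    # Trace back from the best final state.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(obs) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path, best[-1][last]
```

With start probabilities <code>sil</code> 0.8 / <code>voice</code> 0.2, self-transitions 0.7 (<code>sil</code>) and 0.8 (<code>voice</code>), and emissions <code>sil</code>: low 0.9, high 0.1 and <code>voice</code>: low 0.2, high 0.8, the observations <code>low, high, high, low</code> decode to <code>sil, voice, voice, sil</code> with probability 0.01990656.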
<b>Language model</b>
---------------------------------
The language model is the grammar the recognizer works with; it specifies the phrases the recognizer will be able to identify.

In Julius, a recognition grammar consists of two separate files:<br><br>

- '''.voca:''' the list of words the grammar contains. <br>
- '''.grammar:''' the grammar of the language to be recognised.<br><br>

Both files must be compiled into a '''.dfa''' file and a '''.dict''' file with the grammar compiler ''mkdfa.pl''.
The generated .dfa file represents a finite automaton; the .dict file contains the word dictionary in Julius format.

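To illustrate the two grammar files, the Python sketch below generates a minimal <code>.grammar</code>/<code>.voca</code> pair that accepts only a fixed list of questions. It rests on several assumptions: every word gets its own category, <code>NS_B</code>/<code>NS_E</code> are the sentence-start and sentence-end silence categories used in the Julius sample grammars, and the CMU-style phone strings in the example lexicon are illustrative only (they must match the phone set of the acoustic model actually used). <code>make_grammar</code> is a hypothetical helper, not part of Julius.

```python
from typing import Dict, List, Tuple


def make_grammar(questions: List[List[str]], lexicon: Dict[str, str]) -> Tuple[str, str]:
    """Build (.grammar, .voca) text that accepts exactly the given word sequences.

    Each word gets its own category W_<WORD>, so every question becomes one
    rewrite rule for the start symbol S, framed by silence categories.
    """
    rules = []
    for words in questions:
        cats = " ".join(f"W_{w}" for w in words)
        rules.append(f"S : NS_B {cats} NS_E")
    grammar = "\n".join(rules) + "\n"

    # Silence entries for the start and end of each sentence.
    voca = ["% NS_B", "<s>\tsil", "", "% NS_E", "</s>\tsil"]
    for word in sorted({w for q in questions for w in q}):
        if word not in lexicon:
            raise KeyError(f"no pronunciation for {word!r}")
        voca += ["", f"% W_{word}", f"{word}\t{lexicon[word]}"]
    return grammar, "\n".join(voca) + "\n"
```

For example, <code>make_grammar([["HOW", "ARE", "YOU"]], {"HOW": "hh aw", "ARE": "aa r", "YOU": "y uw"})</code> produces the single rule <code>S : NS_B W_HOW W_ARE W_YOU NS_E</code>. After writing the two strings to, say, <code>questions.grammar</code> and <code>questions.voca</code> (a hypothetical prefix), running <code>mkdfa.pl questions</code> should produce the .dfa and .dict files that Julius loads.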

<br>
<html>
<center>
<img src="https://static.igem.org/mediawiki/2012/5/59/Grammar.png" height="230" width="280">
</center>
</html>
<br><br>

Once the acoustic model and the language model have been defined, all that remains is to implement the main program in Python. This program runs Julius and, through voice recognition, identifies the question asked to the culture.

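How the Python program receives Julius's output is not specified here. One option, assumed in this sketch, is Julius's ''module mode'': started with the <code>-module</code> option, Julius acts as a server (on TCP port 10500 by default) and sends XML-like messages, each terminated by a line containing only a period. The sketch splits such a stream into messages and extracts the words of the best hypothesis from a <code>RECOGOUT</code> message. The exact attributes Julius emits, and how it writes the sentence-boundary words, may vary between versions, so the filtering below is an assumption.

```python
import re
from typing import Iterable, Iterator, List, Optional

# Matches the WORD attribute of each word hypothesis in a result message.
_WORD = re.compile(r'<WHYPO\s+WORD="([^"]*)"')


def iter_messages(lines: Iterable[str]) -> Iterator[str]:
    """Yield one module-mode message at a time (each ends with a '.' line)."""
    buf: List[str] = []
    for line in lines:
        if line.strip() == ".":
            yield "".join(buf)
            buf = []
        else:
            buf.append(line)


def recognized_words(message: str) -> Optional[List[str]]:
    """Return the words of the best hypothesis, or None if not a result message."""
    if "<RECOGOUT>" not in message:
        return None
    # Assumption: the first SHYPO block is the best (rank 1) hypothesis.
    best = message.split("</SHYPO>", 1)[0]
    words = _WORD.findall(best)
    # Drop empty words and sentence-boundary silence entries such as <s> and </s>.
    return [w for w in words if w and not w.startswith(("<", "&lt;"))]
```

A socket opened with <code>socket.create_connection(("localhost", 10500))</code> and wrapped with <code>sock.makefile("r", encoding="utf-8")</code> could be passed to <code>iter_messages()</code>; the host, port and encoding are assumptions about how Julius is launched and configured. The recognized words can then be joined and looked up as a question.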
| + | <!-- | ||

| + | %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% | ||

| + | --> | ||

| + | |||

| + | === '''Arduino''' === | ||

| + | |||

| + | <br> | ||

| + | |||

| + | [[Image:arduino.png|700 px||center]] | ||

| + | |||

| + | |||

| + | |||

The '''Arduino Uno''' is a microcontroller board based on the '''ATmega328'''. It has 14 digital input/output pins (6 of which can be used as PWM outputs), 6 analog inputs, a 16 MHz crystal oscillator, a USB connection, a power jack, an ''ICSP header'' and a reset button. It contains everything needed to support the microcontroller: simply connect it to a computer with a USB cable, or power it with an ''AC-to-DC'' adapter or a battery, to get started.

The ''Uno'' differs from all preceding boards in that it does not use the ''FTDI USB-to-serial driver chip''. Instead, it features the '''ATmega16U2''' (ATmega8U2 up to revision R2) programmed as a USB-to-serial converter.

Revision 2 of the Uno board has a resistor pulling the ''8U2 HWB line'' to ground, making it easier to put into ''DFU mode''.

Revision 3 of the board has the following new features:
<ol>
<li>'''1.0 pinout''': '''SDA''' and '''SCL''' pins were added near the AREF pin, together with two other new pins near the ''RESET'' pin. The first is ''IOREF'', which allows shields to adapt to the voltage provided by the board; in future, shields will be compatible both with AVR-based boards, which operate at 5 V, and with the '''Arduino Due''', which operates at 3.3 V. The second is an unconnected pin reserved for future purposes.

<li>A stronger RESET circuit.
<li>The ATmega16U2 replaces the 8U2.
</ol>

"Uno" means "one" in Italian and was chosen to mark the upcoming release of '''Arduino 1.0'''. The Uno and version 1.0 will be the reference versions of '''Arduino''' going forward. The Uno is the latest in a series of USB Arduino boards and the reference model for the ''Arduino platform''; for a comparison with previous versions, see the index of ''Arduino boards''.

| + | |||

| + | <!-- | ||

| + | %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% | ||

| + | --> | ||

=== '''Fluorimeter''' ===
<br>
A fluorimeter is an electronic device that measures light and converts it into an electrical signal. In our project, this light comes from a bacterial culture that has been excited.

Its principle of operation is as follows:

First, the '''Arduino''' sends an electrical signal to an LED to switch it on. The LED emits at a specific wavelength chosen to excite the fluorescent protein present in the microbial culture.

When the bacteria fluoresce, a photodiode receives that light and converts it into an electrical current. This current is proportional to the light intensity, and therefore to the concentration of fluorescent protein in the culture. The relative orientation of the LED and the photodiode must be chosen carefully: otherwise the photodiode also picks up scattered light coming directly from the LED rather than from the culture's fluorescence, which interferes with the measurement. In addition, a suitable band-pass filter placed between the culture and the photodiode lets only the desired wavelengths through.

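Because the photocurrent is, ideally, proportional to the amount of fluorescent protein, readings can be converted into concentrations with a linear calibration against standards of known concentration. This sketch fits an ordinary least-squares line and inverts it. It assumes the response stays within its linear range; any real calibration data would have to come from measured standards, and the intercept absorbs a constant background such as scattered LED light that the filter does not fully remove. The function names are hypothetical.

```python
from typing import Sequence, Tuple


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two paired points")
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def concentration(signal: float, slope: float, intercept: float) -> float:
    """Invert the calibration line to estimate concentration from a reading."""
    return (signal - intercept) / slope
```

For instance, standards at 0, 1, 2 and 3 (arbitrary units) reading 0.1, 0.3, 0.5 and 0.7 V give a slope of 0.2 V per unit and an intercept of 0.1 V, so a 0.5 V reading corresponds to a concentration of 2.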
[[File:Fluorimetro1.gif|center]]<br>

The electric current from the photodiode has a very small amplitude, so it must be amplified and converted into a voltage with an electronic transimpedance amplifier. The gain of this amplifier is set by the values of the resistors in the circuit, so they must be chosen carefully to obtain the desired gain: excessive amplification amplifies not only the signal but also the noise, which is undesirable in the measurement. <br>

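For an ideal transimpedance amplifier, the magnitude of the output voltage is the photocurrent times the feedback resistance, <math>|V_{out}| = I_{ph} R_f</math>, so the choice of <math>R_f</math> sets the gain. The sketch below picks the largest resistor from a list of candidates that keeps the brightest expected signal below a chosen voltage limit. The 200 nA maximum photocurrent, the 4.5 V limit and the resistor values in the example are assumptions, not measurements of our circuit, and real op-amp limitations are ignored.

```python
from typing import Sequence


def tia_output(i_photo: float, r_feedback: float) -> float:
    """Magnitude of the ideal transimpedance amplifier output, |Vout| = I * Rf (volts)."""
    return i_photo * r_feedback


def pick_feedback_resistor(i_max: float, v_max: float, candidates: Sequence[float]) -> float:
    """Largest candidate resistor (ohms) keeping the output at or below v_max
    for the brightest expected photocurrent i_max (amperes).

    More gain gives finer resolution, but too much saturates the amplifier
    and also amplifies noise.
    """
    ok = [r for r in candidates if tia_output(i_max, r) <= v_max]
    if not ok:
        raise ValueError("no candidate keeps the output in range")
    return max(ok)
```

For example, with a 200 nA maximum current, a 22 MΩ feedback resistor gives 4.4 V, which fits below a 4.5 V limit, whereas 47 MΩ would give 9.4 V and saturate.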
[[File:Fluorimetro2.jpg|center|400px]]<br>

Finally, the output voltage is fed to the '''Arduino''', which converts it into a digital signal.<br>

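On the Arduino Uno, <code>analogRead()</code> returns a 10-bit value between 0 and 1023, referenced by default to 5 V. The Python sketch below mirrors that conversion (using the <code>5.0 / 1023</code> scaling of Arduino's ''ReadAnalogVoltage'' example) and then maps the voltage to one of the four answer identifiers with fixed thresholds, as in step 7 of the process. It only illustrates the logic; it is not the board's firmware, and the threshold voltages are hypothetical values that would have to be calibrated.

```python
from bisect import bisect_right
from typing import Sequence

ADC_MAX = 1023   # largest analogRead() value from the 10-bit ATmega328 ADC
V_REF = 5.0      # default analog reference on the Uno (assumes AREF is not used)

# Hypothetical voltage boundaries splitting the signal into four answers.
THRESHOLDS = (1.0, 2.0, 3.0)


def adc_to_volts(raw: int, v_ref: float = V_REF) -> float:
    """Convert a raw analogRead() count to volts."""
    if not 0 <= raw <= ADC_MAX:
        raise ValueError("raw reading out of 10-bit range")
    return raw * v_ref / ADC_MAX


def answer_id(raw: int, thresholds: Sequence[float] = THRESHOLDS) -> int:
    """Map a reading to an answer identifier from 1 to len(thresholds) + 1."""
    return bisect_right(thresholds, adc_to_volts(raw)) + 1
```

With these placeholder thresholds, a reading of 512 (about 2.5 V) maps to answer 3, and a full-scale reading of 1023 (5 V) maps to answer 4.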
[[File:Fluorimetro3.jpg|center|400px]]<br>

The original idea was taken from ''[1]''.<br><br><br>

=== '''Light Mediated Translator (LMT)''' ===

<div id="PorDefecto">
<html>
<table style="background-color:transparent">
<tr>
<br>
<td width ="300" align="justify">As a final step, we are working on the design of a <i>humanoid chassis</i> that will mediate, in a friendly way, the communication between humans and microorganisms. We chose a <b>C3PO</b>-like bust because C3PO is a universal translator (fluent in over <i>6000 languages</i>) and a well-known sci-fi character.<br><br>

In the image on the right, you can see some of the elements of the fluorimeter, as well as the <i>C3PO mask</i> (on the left) and the mannequin (in the background) that we will use to build the humanoid interface.<br><br>

This chassis will house our home-made fluorimeter (still under construction). When finished, it will provide an easy (and fun) way to communicate with <b>our culture</b>!
<br><br><br><br><br><br><br><br>
</td>
<td width ="400" align="center"><img src="https://static.igem.org/mediawiki/2012/7/78/ZG36O.jpeg" width="350" height="450" BORDER=0></td>
</tr>
</table>
</html>

<br>

''[1]'' Benjamin T. Wigton et al., ''Low-Cost, LED Fluorimeter for Middle School, High School, and Undergraduate Chemistry Lab'', J. Chem. Educ. 2011, 88, 1182-1187.

Latest revision as of 23:44, 26 September 2012

- The input used is a voice signal (question), which will be collected by our voice recognizer.

- The voice recognizer identifies the question and, through the program in charge of establishing the communication, its corresponding identifier is written in the assigned port of the arduino.

- The software of the arduino reads the written identifier and, according to it, the corresponding port is selected, indicating the flourimeter which wavelength has to be emitted on the culture. There are four possible questions (q), and each of them is associated to a different wavelength.

- The fluorimeter emits light (BioInput), exciting the compound through optic filters.

- Due to the excitation produced, the compound emits fluorescence (BioOutput), which is measured by the fluorimeter with a sensor.

- This fluorescence corresponds to one of the four possible answers (r: response).

- The program of the arduino identifies the answer and writes its identifier in the corresponding port.

- The communication program reads the identifier of the answer from the port.

- "Espeak" emits the answer via a voice signal (Output).

- Acoustic Analysis

Acoustic models take the acoustic properties of the input signal. They acquire a group of vectors of certain characteristics that will later be compared with a group of patterns that represent symbols of a phonetic alphabet and return the symbols which resembles them the most. This is the basis of the mathematical probabilistic process called Hidden Markov Model. The acoustic analysis is based on the extraction of a vector similar to the input acoustic signal with the purpose of applying the theory of pattern recognition. This vector is a parametric representation of the acoustic signal, containing the most relevant information and storing as compressed as possible. In order to obtain a good group of vectors, the signal is pre-processed, reducing background noise and correlation.

- HMM, Hidden Markov Model

Hidden Markov Models (HMMs) provide a simple and effective framework for modelling time-varying spectral vector sequences. As a consequence, almost all present day large vocabulary continuous speech recognition systems are based on HMMs.

The core of all speech recognition systems consists of a set of statistical models representing the various sounds of the language to be recognised. Since speech has temporal structure and can be encoded as a sequence of spectral vectors spanning the audio frequency range, the hidden Markov model (HMM) provides a natural framework for constructing such models.

Basically, the input audio waveform from a microphone is converted into a sequence of fixed size acoustic vectors Y[1:T] = y1, . . . ,yT in a process called feature extraction. The decoder then attempts to find the sequence of words w'[1:L] = w1,...,wL which is most likely to have generated Y , i.e. the decoder tries to find.

w' = argmax{P(w|Y)} for all the words w considered

operating this (Bayes rule) the likelihood p(Y|w) is determined by the acoustic model and the prior P(w) is determined by the language model.

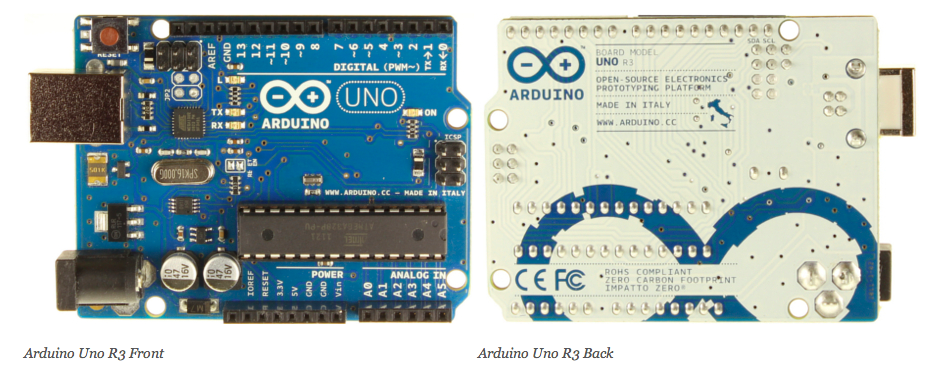

- 1.0 pinout: added SDA and SCL pins that are near to the AREF pin and two other new pins placed near to the RESET pin, the IOREF that allow the shields to adapt to the voltage provided from the board. In future, shields will be compatible both with the board that use the AVR, which operate with 5V and with the Arduino Due that operate with 3.3V. The second one is a not connected pin, that is reserved for future purposes.

- Stronger RESET circuit.

- Atmega 16U2 replace the 8U2.

Talking Interfaces

THE PROCESS

The main objective of our project is to accomplish a verbal communication with our microorganisms.

To do that, we need to establish the following process:

You can see an explicative animation of this process here:

In the following sections we analyse in detail the main elements used in the process:

Voice recognizer

Julius is a continuous speech real-time recongizer engine. It is based on Markov's interpretation of hidden models. It's opensource and distributed with a BSD licence. Its main platfomorm is Linux and other Unix systems, but it also works in Windows. It has been developed as a part of a free software kit for research in large-vocabulary continuous speech recognition (LVCSR) from 1977, and the Kyoto University of Japan has continued the work from 1999 to 2003.

In order to use Julius, it is necessary to create a language model and an acoustic model. Julius adopts the acoustic models and the pronunctiation dictionaries from the HTK software, which is not opensource, but can be used and downloaded for its use and posterior generation of acoustic models.

Acoustic model

An acoustic model is a file which contains an statistical representation of each of the different sounds that form a word (phoneme). Julius allows voice recognition through continuous dictation or by using a previously introduced grammar. However, the use of continuous dictation carries a problem. It requires an acoustic model trained with lots of voice files. As the amount of sound files containing voices and different texts increases, the ratio of good hits of the acoustic model will improve. This implies several variations: the pronunciation of the person training the model, the dialect used, etc. Nowadays, Julius doesn't count with any model good enough for continuous dictation in English.

Due to the different problems presented by this kind of recognition, we chose the second option. We designed a grammar using the Voxforce acoustic model based in Markov's Hidden Models.

To do this, we need the following file:

-file .dict:a list of all the words that we want our culture to recognize and its corresponding decomposition into phonemes.

Language model

Language model refers to the grammar on which the recognizer will work and specify the phrases that it will be able to identify.

In Julius, recognition grammar is composed by two separate files:

- .voca: List of words that the grammar contains.

- .grammar: specifies the grammar of the language to be recognised.

Both files must be converted to .dfa and to .dict using the grammar compilator "mkdfa.pl" The .dfa file generated represents a finite automat. The .dict archive contains the dictionary of words in Julius format.

Once the acoustic language and the language model have been defined, all we need is the implementation of the main program in Python.

Arduino

The Arduino Uno is a microcontroller board based on the ATmega328 (datasheet). It has 14 digital input/output pins (of which 6 can be used as PWM outputs), 6 analog inputs, a 16 MHz crystal oscillator, a USB connection, a power jack, an ICSP header, and a reset button. It contains everything needed to support the microcontroller; simply connect it to a computer with a USB cable or power it with a AC-to-DC adapter or battery to get started.

The Uno differs from all preceding boards in that it does not use the FTDI USB-to-serial driver chip. Instead, it features the Atmega16U2 (Atmega8U2 up to version R2) programmed as a USB-to-serial converter.

Revision 2 of the Uno board has a resistor pulling the 8U2 HWB line to ground, making it easier to put into DFU mode.

Revision 3 of the board has the following new features:

"Uno" means one in Italian and is named to mark the upcoming release of Arduino 1.0. The Uno and version 1.0 will be the reference versions of Arduino, moving forward. The Uno is the latest in a series of USB Arduino boards, and the reference model for the Arduino platform; for a comparison with previous versions, see the index of Arduino boards.

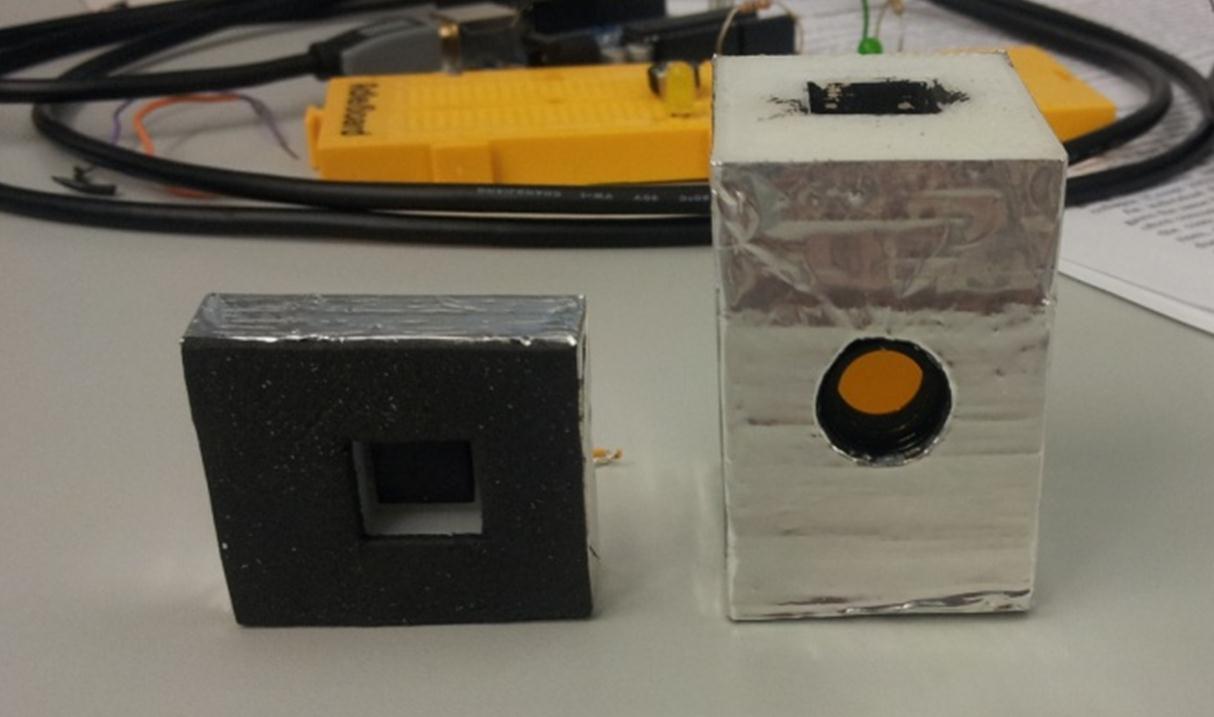

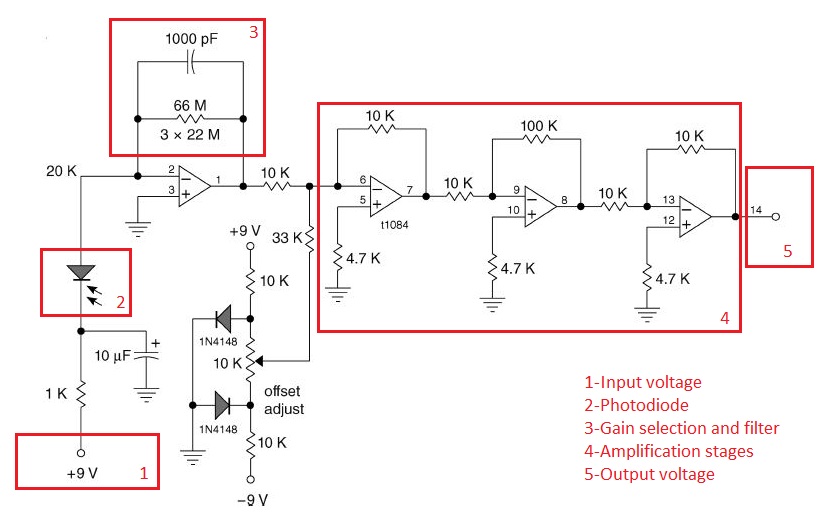

Fluorimeter

A fluorimeter is an electronic device that can read the light and transform it in an electrical signal. In our project, that light proceeds from a bacterial culture that has been excited.

Its principle of operation is as follows:

First, an electrical signal is sent from Arduino to a LED to activate it. This LED has a specific wavelength to excite the fluorescent protein present in the microbial culture.

When these bacteria are shining, we receive that light intensity with a photodiode, and transform it into electrical current. Now, we have an electrical current that is proportional with the light and therefor to the concentration of fluorescent protein present in the culture. We have to be careful with the orientation between the LED and the photodiode because interferences may occur in the measurement due to light received by photodiode but not emitted by the fluorescence in the culture but scattered light emitted by the LED. Also, a proper band-pass filter has been placed between culture and photodiode to allow only the desired wavelengths to pass through.

The electric current coming from the photodiode has a very small amplitude, thereby we have to amplify it and translate it into a electric voltage with an electronic trans-impedance amplifier. We can choose the gain of this amplification changing the value of resistances of the circuit. The resistances must be properly chosen to select the desired amplification gain. Excessive amplification will not only amplify the signal but also amplify the noise, which is no desired in the measurement.

Finally, the output voltage is sent to Arduino, and it will translate that in a digital signal.

The original idea was taken from [1].

Light Mediated Translator (LMT)

| As a final step, we are working in the design of a humanoid chassis that will friendly mediate the communication between humans and microorganisms. We thought in a C3PO-like bust because it is a universal translator -over 6000 languages- and is a well-known sci-fi character. In the image on the right, you can see some of the elements of the fluorimeter as well as the C3PO mask (on the left) and the mannequin (in the background) we will use to construct the humanoid interface. This chassis will host our home-made fluorimeter (still under construction). When finished, it will allow an easy -and funny- communication with our culture! |

|

"

"