Team:TU Darmstadt/Protocols/Information theory

From 2012.igem.org

Latest revision as of 21:30, 26 September 2012

Information Theory

Entropy

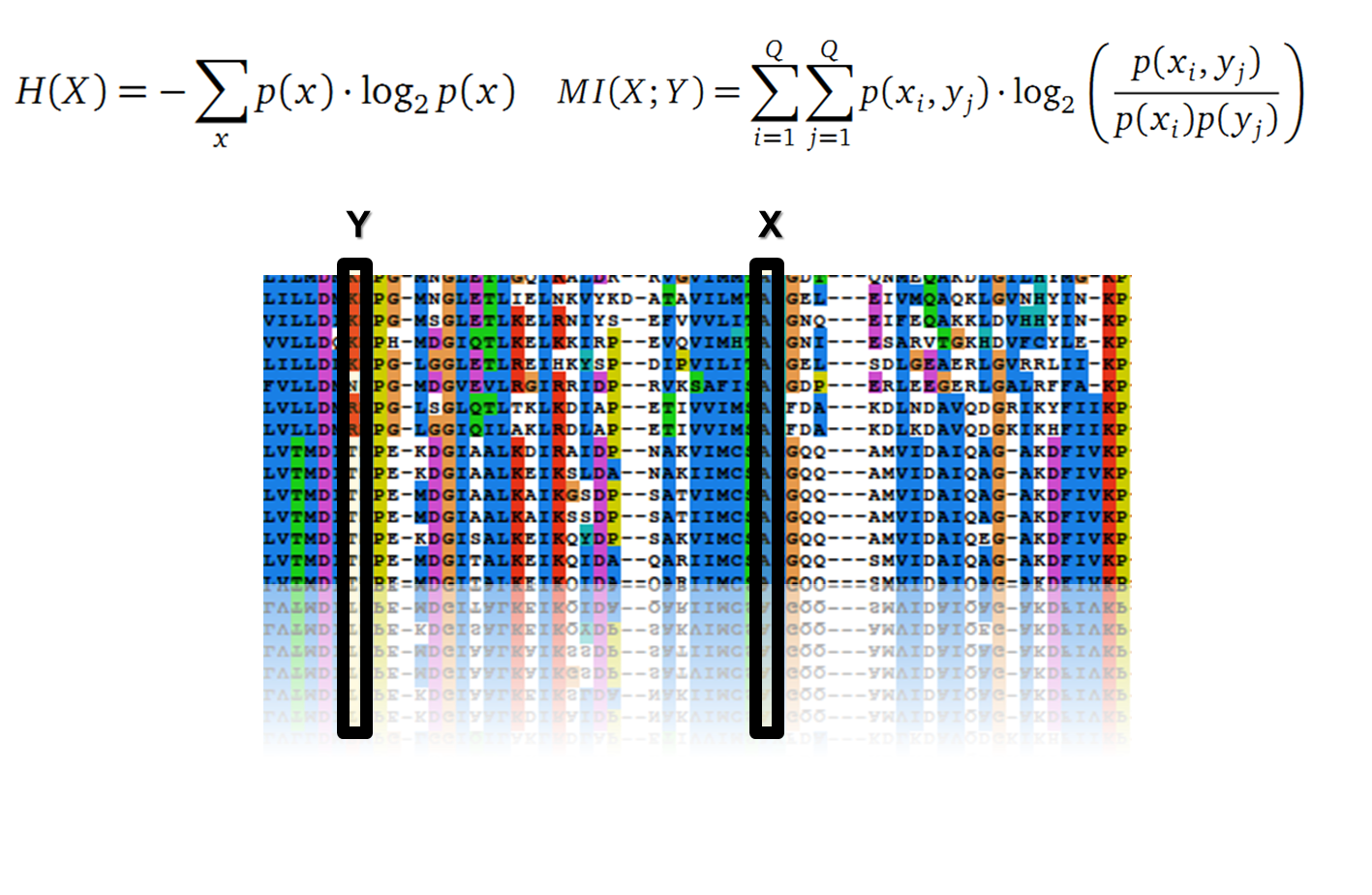

Claude Shannon introduced a measure of the uncertainty of a random variable X, now known as Shannon entropy H [1]. If the logarithm is taken to base 2, entropy is measured in bits. Here p(x) denotes the probability mass function of X.

<math>H(X)=-\sum\limits_{x}p(x)\cdot \log_{2} p(x)</math>
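A minimal Python sketch of the formula above, assuming p(x) is estimated from the relative frequencies of the symbols in an observed sequence. The function name `shannon_entropy` is illustrative and not taken from the original page:

```python
from collections import Counter
from math import log2


def shannon_entropy(symbols):
    """Shannon entropy in bits of the empirical distribution of `symbols`."""
    counts = Counter(symbols)
    n = len(symbols)
    # p * log2(1/p) equals -p * log2(p); symbols that never occur are not in
    # `counts`, which matches the convention 0 * log2(0) = 0.
    return sum((c / n) * log2(n / c) for c in counts.values())


print(shannon_entropy("AAAA"))  # 0.0 (a single symbol: no uncertainty)
print(shannon_entropy("ACGT"))  # 2.0 (four equiprobable symbols)
```

Four equally frequent nucleotides give the maximum of log2(4) = 2 bits for a DNA alphabet.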

Mutual Information

In information theory, mutual information (MI) measures the dependence between two random variables X and Y: it quantifies how much information about X is gained by knowing Y, and vice versa. With H(X) and H(Y) the Shannon entropies of X and Y and H(X,Y) their joint (two-point) entropy, the MI is

<math>MI(X;Y)=H(X)+H(Y)-H(X,Y)</math>
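The identity above can be sketched in Python by estimating H(X), H(Y) and the joint entropy H(X,Y) from paired observations. Treating each pair (x, y) as one outcome of the joint variable is the assumption here, and the helper names `entropy` and `mutual_information` are illustrative:

```python
from collections import Counter
from math import log2


def entropy(observations):
    """Entropy in bits of the empirical distribution of `observations`."""
    counts = Counter(observations)
    n = len(observations)
    # p * log2(1/p) equals -p * log2(p) for 0 < p <= 1
    return sum((c / n) * log2(n / c) for c in counts.values())


def mutual_information(xs, ys):
    """MI(X;Y) = H(X) + H(Y) - H(X,Y) from paired samples xs[k], ys[k]."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    joint = list(zip(xs, ys))  # each pair (x, y) is one outcome of (X, Y)
    return entropy(xs) + entropy(ys) - entropy(joint)


# Y is a copy of X: knowing Y removes all uncertainty about X
print(mutual_information("AACC", "AACC"))  # 1.0
# every combination of X and Y occurs equally often: no shared information
print(mutual_information("AACC", "ACAC"))  # 0.0
```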

Application of MI to Sequence Alignments

MI can be used to measure co-evolution signals in multiple sequence alignments (MSAs) [2][3]. An MSA aligns three or more amino acid or nucleotide sequences and is used to investigate their functional or evolutionary homology. The MI between two columns of an MSA can be computed as the Kullback-Leibler divergence between their joint distribution and the product of their marginal distributions:

<math>MI(X;Y)= \sum\limits_{i=1}^{Q}\sum\limits_{j=1}^{Q}p(x_{i},y_{j})\cdot \log_{2}\left( \frac{p(x_{i},y_{j})}{p(x_{i})p(y_{j})}\right)</math>

with p(x_i) and p(y_j) being the relative frequencies of the symbols x_i and y_j in columns X and Y of the MSA, p(x_i, y_j) the joint frequency with which x_i occurs in column X and y_j in column Y of the same sequence, and Q the number of symbols in the corresponding alphabet (DNA or protein). Terms with p(x_i, y_j) = 0 contribute zero. Evaluating this sum for every pair of columns yields a symmetric matrix M that contains the MI values of all column pairs of the MSA. Dependent (for example co-evolving) columns show high MI values.
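The column-wise computation can be sketched in Python, assuming the MSA is given as a list of equally long, already aligned strings and that frequencies are plain relative counts without pseudocounts or sequence weighting (common refinements that the text does not specify). The names `column_mi` and `mi_matrix` are illustrative:

```python
from collections import Counter
from itertools import combinations
from math import log2


def column_mi(col_x, col_y):
    """MI in bits of two MSA columns, using relative symbol frequencies."""
    n = len(col_x)
    count_x = Counter(col_x)
    count_y = Counter(col_y)
    count_xy = Counter(zip(col_x, col_y))
    mi = 0.0
    # Summing over observed pairs only: pairs with p(x_i, y_j) = 0 add nothing.
    for (x, y), c_xy in count_xy.items():
        # p(x,y) / (p(x) p(y)) simplifies to c_xy * n / (c_x * c_y)
        mi += (c_xy / n) * log2(c_xy * n / (count_x[x] * count_y[y]))
    return mi


def mi_matrix(msa):
    """Symmetric matrix M with M[i][j] = MI of columns i and j of the MSA."""
    length = len(msa[0])
    if any(len(seq) != length for seq in msa):
        raise ValueError("all aligned sequences must have the same length")
    # Column k collects the k-th symbol of every aligned sequence.
    columns = ["".join(seq[k] for seq in msa) for k in range(length)]
    m = [[0.0] * length for _ in range(length)]
    # Only distinct column pairs are filled; the diagonal is left at 0.0.
    for i, j in combinations(range(length), 2):
        m[i][j] = m[j][i] = column_mi(columns[i], columns[j])
    return m


msa = ["ACGA", "ACGT", "GTCA", "GTCT"]  # toy alignment of four sequences
m = mi_matrix(msa)
print(m[0][1])  # 1.0 (columns 0 and 1 always change together)
print(m[0][3])  # 0.0 (column 3 varies independently of column 0)
```

Because terms with zero joint frequency vanish, iterating over the observed symbol pairs gives the same result as the full Q × Q double sum.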

Normalisation

A standard score (Z-score) indicates by how many standard deviations a value differs from the mean of a distribution. Z-scores of MI values can be calculated with a shuffle null model: the symbols within each MSA column are shuffled, which eliminates all dependencies between column pairs while preserving each column's symbol composition. E(M_ij) denotes the expected MI of columns i and j under this null model and Var(M_ij) its variance [4].

<math>Z_{ij}=\frac{M_{ij}-E(M_{ij})}{\sqrt{Var(M_{ij})}}</math>
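The shuffle null model can be sketched in Python by estimating E(M_ij) and Var(M_ij) empirically: both columns are shuffled independently many times and the MI of each shuffled pair is recomputed. This Monte Carlo estimate, the parameters `n_shuffles` and `seed`, and the function names are assumptions for illustration; the procedure used in [4] may differ.

```python
import random
from collections import Counter
from math import log2, sqrt
from statistics import mean, pvariance


def column_mi(col_x, col_y):
    """MI in bits of two equally long MSA columns (relative frequencies)."""
    n = len(col_x)
    count_x, count_y = Counter(col_x), Counter(col_y)
    count_xy = Counter(zip(col_x, col_y))
    return sum(
        (c / n) * log2(c * n / (count_x[x] * count_y[y]))
        for (x, y), c in count_xy.items()
    )


def mi_z_score(col_x, col_y, n_shuffles=1000, seed=0):
    """Z_ij = (M_ij - E(M_ij)) / sqrt(Var(M_ij)) under a shuffle null model.

    E and Var are estimated from `n_shuffles` random shufflings
    (hypothetical parameters chosen for illustration).
    """
    rng = random.Random(seed)
    observed = column_mi(col_x, col_y)
    xs, ys = list(col_x), list(col_y)
    null_mi = []
    for _ in range(n_shuffles):
        # Shuffling each column on its own keeps its symbol composition
        # but destroys any pairing between the two columns.
        rng.shuffle(xs)
        rng.shuffle(ys)
        null_mi.append(column_mi(xs, ys))
    expected = mean(null_mi)
    variance = pvariance(null_mi)
    if variance == 0.0:
        # e.g. a fully conserved column: every shuffle gives the same MI
        raise ValueError("zero variance under the null model; Z-score undefined")
    return (observed - expected) / sqrt(variance)


col_a = "AAAAACCCCCGGGGGTTTTT"
col_b = "CCCCCAAAAATTTTTGGGGG"  # each symbol of col_a maps to one symbol here
print(mi_z_score(col_a, col_b))
```

For these perfectly co-varying columns the observed MI (2 bits) lies far above the values obtained after shuffling, so the Z-score is large and positive. With real MSAs, `n_shuffles` trades runtime against the accuracy of the estimated E(M_ij) and Var(M_ij).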

References

[1] C. E. Shannon, “A Mathematical Theory of Communication,” The Bell System Technical Journal, vol. 27, pp. 379–423, 1948.

[2] K. Hamacher, “Relating sequence evolution of HIV1-protease to its underlying molecular mechanics,” Gene, vol. 422, no. 1–2, pp. 30–36, 2008.

[3] P. Boba, P. Weil, F. Hoffgaard, and K. Hamacher, “Intra- and Inter-Molecular Co-Evolution: The Case of HIV1 Protease and Reverse Transcriptase,” Springer Communications in Computer and Information Science, vol. 127, pp. 356–366, 2011.

[4] P. Weil, F. Hoffgaard, and K. Hamacher, “Estimating sufficient statistics in co-evolutionary analysis by mutual information,” Comput Biol Chem, vol. 33, no. 6, pp. 440–444, 2009.