Team:Wellesley HCI/Methodology

From 2012.igem.org


Revision as of 01:00, 4 October 2012

User-Centered Design

Overview

To build a successful software tool, we as designers are responsible for catering to the needs of the user. Throughout our projects, we applied a user-centered design (UCD) process to our MS Surface and web-based tools as well as to our outreach program. In UCD, user input is crucial at every stage of the design process. The goal of UCD is to create tools that enhance users' existing intuitive behaviors and practices instead of forcing users to change their behavior to adapt to our software. We therefore paid close attention to the feedback and opinions of our potential users, synthetic biologists, and in each iteration of our software design we made improvements that address the questions and problems they raised.

Design Process

The user-centered design process we followed this year can be divided into four stages: analysis, design, implementation, and evaluation. Below, we describe the key activities we carried out in each stage.

Analysis

In the analysis phase we visited a variety of potential users of our software to understand their profiles: their needs, requirements, and visions for the software we were to develop. In this phase we also conducted extensive background research on competing products and began envisioning scenarios in which synthetic biologists might use our software in practice.

- Observed users: MIT iGEM team, BU iGEM team, introductory biology students from Wellesley College, MIT-Wellesley Upward Bound Program high school participants.

- We conducted focus groups and interviews with experts in the fields of synthetic biology and biosafety, including experts from pharmaceutical companies such as Agilent Technologies and Sirtris Pharmaceuticals.

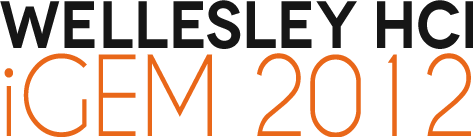

- In addition, the entire computational team participated first-hand in a synthetic biology experiment inspired by a previous iGEM project, to familiarize ourselves with the wet-lab environment and to understand some of the common safety precautions and lab management techniques biologists use while carrying out experiments.

- We set out to develop applications for the MS Surface and looked at various existing applications for organizing scientific data and visualizing abstract scientific processes, such as modular cloning. We also looked at several powerful Internet search engines and biology databases, and analyzed the strengths and weaknesses of each design before creating SynBio Search. In preparation for our outreach program, we also looked at educational games and interactive techniques that are both MS Surface-based and kinesthetically interactive.

- Throughout the analysis phase we created user profiles and made preliminary plans for an analytical task that users would perform to test our designs.

Design

In the design phase we sit down with the users and brainstorm concepts for the design of the software, then create low-fidelity prototypes. In collaboration with potential users, we then begin testing the usability of a higher-fidelity prototype of the design.

- We took the first week of our summer research program to brainstorm design concepts at Wellesley, and invited several collaborators to the brainstorming sessions. Students presented their findings on related work to the group, we brainstormed design concepts, and we developed walkthroughs of those concepts by creating paper prototypes. After creating design sketches for our projects, we built low-fidelity prototypes, the first iterations of our programs.

- During the design phase, we also conducted usability testing of our low-fidelity MoClo Planner prototype with biology students at Wellesley College. Their feedback allowed us to iterate quickly on the design of our program. Testing SynFlo with students from Upward Bound likewise allowed us to iterate on its design. As SynBio Search was created towards the end of the summer session, we are still in the design and preliminary testing stage for this program.

Implementation

With feedback from our users, we then begin the implementation stage, in which we conduct further usability tests with synthetic biologists and evaluate the visual design, performance, and efficiency of our tools.

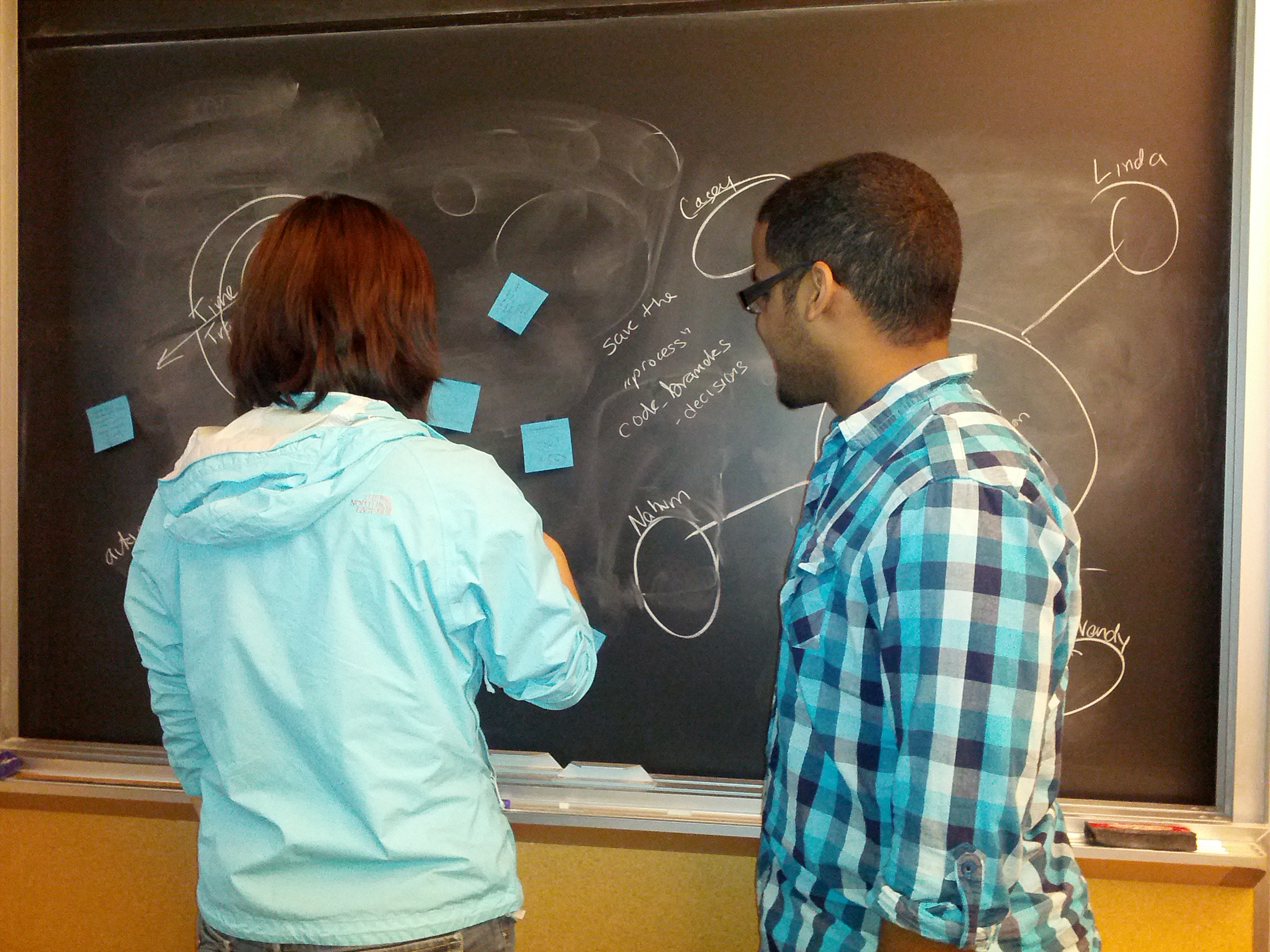

- During this phase, we conducted ongoing heuristic evaluations by testing our software with BU and MIT’s iGEM teams. We iterated on the visual design of our [MoClo Planner](https://2012.igem.org/Team:Wellesley_HCI/MoClo_Planner) program and improved its performance by making the search option more efficient, along with many other small changes that improved user satisfaction with our software.

- We continued to interview experts in synthetic biology and started considering questions about [safety](http://youtu.be/IPr-D4vAGAM), collaboration, and information sharing.

Evaluation

Finally, in the evaluation stage, we deploy our refined software tools for use in the wet lab and evaluate overall user satisfaction with them.

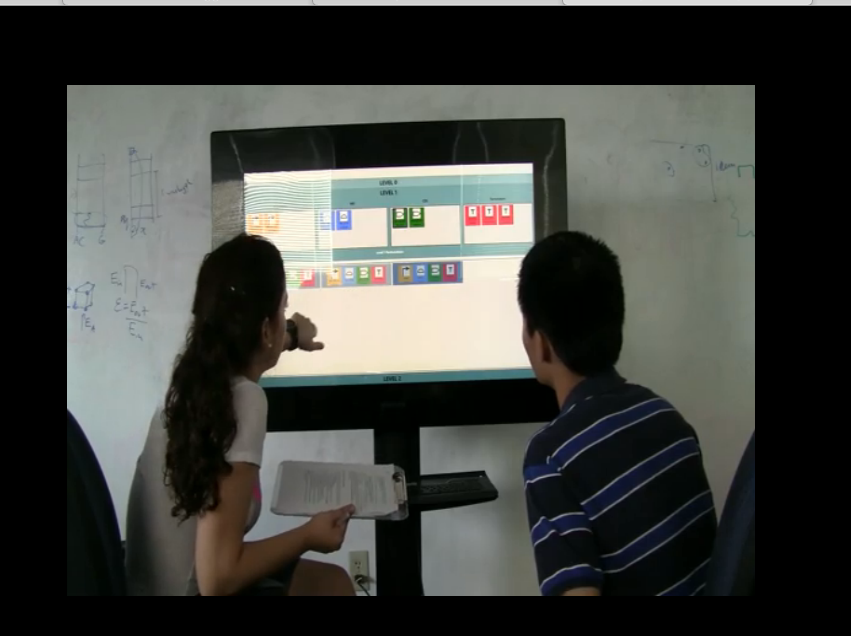

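- Usability and usefulness: we conducted testing with the BU and MIT teams as well as with Wellesley biology students. We used various quantitative measures (e.g. time on task, subjective satisfaction) and qualitative indicators (e.g. user collaboration and problem-solving styles). See results from the evaluation of [SynBio Search](https://2012.igem.org/Team:Wellesley_HCI/SynBio_Search#results), [MoClo Planner](https://2012.igem.org/Team:Wellesley_HCI/MoClo_Planner#results), and [SynFlo](https://2012.igem.org/Team:Wellesley_HCI/SynFlo#results).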
